Category: 1990s

Epson PhotoPC

Previously on Electric Thrift I mentioned that I passed on buying a Sony Mavica at Goodwill because it was missing the power supply and proprietary batteries. I’m very glad I didn’t buy that camera because shortly after that I found an even older digital camera!

The oldest digital camera I can remember seeing was the Apple QuickTake 100 that my 5th grade teacher, Mr. Bennett, used around 1995. The second oldest digital camera I’ve ever seen is this Epson PhotoPC, which I recently found at Village Thrift.

Village Thrift has a “showcase,” a section of supposedly more expensive items kept on shelves behind a counter, so you have to ask the cashier if you want a closer look. This creates a dilemma because it can often take a while to get the cashier’s attention. You have to really want to see an item in the showcase to justify waiting, but the items are kept too far away to get a good enough look to really get interested in them.

I had seen the PhotoPC box back there for several weeks and never grasped the age of the thing. Luckily, there’s a spot where the showcase’s counter ends and items sometimes spill over from the showcase’s rear shelves onto the normal, more accessible shelves. That’s when I finally got a close look at the box and realized this was a digital camera box with screenshots from Windows 3.1! “Copyright 1995 Epson America, Inc.” This is a survivor from the digital camera Jurassic period.

We tend to think of digital cameras as springing into existence as luxury objects in the late 90s, hitting their prime in the 2000s as people realized the utility of getting photos online, and becoming ubiquitous after 2007 as the cheap ones were integrated into smartphones and tablets and the more expensive DSLRs overtook their film counterparts.

According to digicamhistory.com, this was among the first digital cameras under $500. You can see how they needed to make the camera extremely simple to meet that price. Even though other contemporary digital cameras like the QuickTake 100 had LCD screens, the Epson PhotoPC has none. There’s just a conventional viewfinder. As a result, you can’t review photos you’ve taken on the camera itself; you have to connect it to a PC. There is, however, a button that deletes the last photo taken. And because there’s no screen, all you get is a tiny LCD status display that tells you how the battery is doing, whether the flash is on, how many shots you’ve taken, and how many shots you have left.

This is an esoteric analogy, but the Epson PhotoPC reminds me of the Ryan Fireball, a bizarre Navy fighter aircraft with both a piston engine and a jet. The PhotoPC is a cheap 90s autofocus point-and-shoot camera that just happens to be digital. They’re both weird artifacts of a transitional time.

When you do connect the PhotoPC to a PC to look at the photos, you’re connecting with a serial cable, because this camera predates USB. For that matter, it also predates CompactFlash. There’s 1MB of flash memory built into the camera and a slot for an additional 4MB of flash on a proprietary “PhotoSpan” memory module. My camera’s expansion slot is empty, so the built-in 1MB of flash holds a mere sixteen 640×480 photos.
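A quick back-of-the-envelope calculation shows how hard the PhotoPC had to squeeze each image to fit sixteen of them into 1MB. This is only a sketch under stated assumptions: 1MB is taken as 2^20 bytes, all of it is assumed usable for photos, and an uncompressed frame is assumed to be 24-bit color (3 bytes per pixel). Epson’s actual file format and storage overhead aren’t covered here.

```python
# Back-of-the-envelope storage math for the Epson PhotoPC.
# Assumptions (not from Epson documentation): 1MB = 2**20 bytes, all of it
# usable for photos, and an uncompressed frame stored at 3 bytes per pixel.

FLASH_BYTES = 1 * 2**20      # built-in flash memory
PHOTOS = 16                  # photos the camera holds with no expansion module
WIDTH, HEIGHT = 640, 480     # high-resolution mode
BYTES_PER_PIXEL = 3          # 24-bit color, uncompressed (assumed)


def storage_budget(flash_bytes: int, photos: int) -> int:
    """Bytes available per photo if the flash is split evenly."""
    return flash_bytes // photos


def raw_frame_size(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


budget = storage_budget(FLASH_BYTES, PHOTOS)
raw = raw_frame_size(WIDTH, HEIGHT, BYTES_PER_PIXEL)
print(f"Budget per photo: {budget:,} bytes")
print(f"Uncompressed 640x480 frame: {raw:,} bytes")
print(f"Implied compression ratio: about {raw / budget:.0f}:1")
```

In other words, each photo had to fit into roughly a fourteenth of its raw size, which may help explain some of the fuzziness and color artifacts in the PhotoPC’s images.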

My parents’ old Dell Pentium III (which you may recall from the Voodoo 2 post) runs Windows 98, so I installed the EasyPhoto software, hooked up the serial cable, and pulled some pictures off of the PhotoPC. I can tell you that pulling photos off of this thing via serial cable is a lot like watching paint dry. I watched the progress bar snail its way across the screen and was pleased when it got to 100%…until I realized that was just for one photo. One 640×480 photo. If you’ve got a full camera with 16 photos to transfer, you might as well go make yourself a sandwich and catch an inning of the ballgame while they transfer.
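Some simple arithmetic shows why a serial link feels so slow for photos. I don’t know what port speed the camera and EasyPhoto actually negotiated, so this sketch simply tries a few common RS-232 rates. It assumes a photo of about 64KB, 8N1 framing (10 bits on the wire per data byte), and no protocol or software overhead. At an assumed 9600 bps, the estimate comes out at a little over a minute per photo, which is in the same ballpark as the 15-20 minutes for 16 photos I mention below.

```python
# Rough serial transfer time estimate for PhotoPC images.
# Assumptions (hypothetical, not from Epson docs): ~64KB per photo,
# 8N1 framing (1 start + 8 data + 1 stop bit = 10 bits per byte),
# and no protocol or software overhead.

PHOTO_BYTES = 65_536
BITS_PER_BYTE_ON_WIRE = 10


def transfer_seconds(num_bytes: int, baud: int) -> float:
    """Seconds to push num_bytes over an 8N1 serial line at `baud` bits/s."""
    return num_bytes * BITS_PER_BYTE_ON_WIRE / baud


for baud in (9600, 19200, 57600, 115200):
    one = transfer_seconds(PHOTO_BYTES, baud)
    sixteen_min = 16 * one / 60
    print(f"{baud:>6} bps: {one:5.1f} s per photo, {sixteen_min:4.1f} min for 16")
```

Real transfers would also include handshaking and whatever time the camera spent reading its flash, so treat these numbers as a rough floor rather than a measurement.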

However, when you think about the PhotoPC in context, even this molasses pace would have seemed Earth-shattering in 1996. Imagine you were one of the people venturing out onto the Internet back then. If you wanted to post a digital photo to a website or attach it to an email, you would have had to either:

- Take a roll of photos on a film camera, have them developed, then scan them, and then presumably crop the photo and resize it for the Internet.

- Take photos with a Polaroid camera, scan them, and then presumably crop the photo and resize it for the Internet.

I’ve done enough scanning to know that would be immensely time-consuming. Once you had a digital camera, even a barebones one like the PhotoPC, you could go almost straight from taking a photo to getting it on the Internet.

Not only that, but you could take 16 photos, spend 15-20 minutes transferring them to the PC, wipe the camera, and take another 16 photos, over and over. Sure, this would be no good on your trip to the Grand Canyon, but if you were having a family reunion at your house, or were in any other situation where you’re close to a PC, this would have seemed miraculous.

So, what do photos taken with the Epson PhotoPC look like? A lot like the photos I remember seeing on the Internet in the late 90s: A bit fuzzy. Strange color artifacts. Not great focus.

Keep in mind that in 1996 you’d have been lucky to be running 800×600 at 32-bit color on your monitor. Back then, 640×480 images were serious business.

The wonderful thing about finding this camera is how it is so utterly an artifact of the past but also totally tied to today. The PhotoPC was one of the rat-like mammals that scurried among the film dinosaurs. A film camera and my iPad belong to two totally different eras, but the PhotoPC is clearly a distant ancestor of my iPad.

Apple Macintosh Quadra 700 and AppleColor High-Resolution RGB Monitor (Part I)

Sometime between 2003 and 2006 I found this Apple Macintosh Quadra 700 at the old State Road Goodwill in Cuyahoga Falls. According to this Macintosh serial decoding site my Quadra (serial # F114628QC82) was the 7012th Mac built in the 14th week of 1991 in Apple’s Fremont, California factory.
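The serial number site’s answer can be roughly reproduced by hand. The sketch below assumes a layout inferred from the figures quoted above, not taken from any Apple specification: one letter for the factory, one digit for the last digit of the year, two digits for the week, then a three-character production number written in base 34 (digits plus letters, with I and O assumed to be skipped). The 1990s decade is also an assumption, and the trailing characters, which presumably identify the model, are left undecoded. Under those assumptions, `628` works out to exactly 7012, matching the site.

```python
# Hypothetical decoder for an early-1990s Apple serial like "F114628QC82".
# The layout and alphabet are assumptions inferred from this one example,
# not an official Apple specification.

# Base-34 digits: 0-9 then A-Z, skipping the easily confused I and O (assumed).
BASE34 = "0123456789ABCDEFGHJKLMNPQRSTUVWXYZ"
FACTORIES = {"F": "Fremont, California"}  # only the factory seen here


def base34_to_int(text: str) -> int:
    """Interpret `text` as a base-34 number using the BASE34 alphabet."""
    value = 0
    for ch in text:
        value = value * 34 + BASE34.index(ch)
    return value


def decode_serial(serial: str, decade: int = 1990) -> dict:
    """Split an early-1990s serial into factory, year, week and unit number."""
    return {
        "factory": FACTORIES.get(serial[0], "unknown"),
        "year": decade + int(serial[1]),
        "week": int(serial[2:4]),
        "unit": base34_to_int(serial[4:7]),
        "rest": serial[7:],  # presumably a model code; not decoded here
    }


print(decode_serial("F114628QC82"))
```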

It looks like I paid $15 for the Quadra and the massive Apple Multiple Scan 17 CRT monitor that came with it.

In fact, the Multiple Scan 17 is so large and hulking that I don’t feel like dragging it out of the closet to take a better photo.

After I started this blog, I dragged most of the vintage Mac stuff out of my parents’ attic and over to my apartment. I decided that the Quadra 700 should get a semi-permanent place on my vintage computing desk. The desk (which you’ve probably seen in the Macintosh SE and PowerBook G3 entries) has a credenza that limits how deep a monitor I can use. The Multiple Scan 17 doesn’t leave enough space for the keyboard and really restricts what else I can have on the desk.

Originally my plan was to use the Quadra with an HP 1740 LCD monitor I picked up at the Kent-Ravenna Goodwill, so I bought a DB-15 to HD-15 (VGA) converter.

However, while digging through the Mac stuff in my parents’ attic, I made an interesting discovery. Unbeknownst to me, I owned an AppleColor High-Resolution RGB 13″ monitor.

When I was still living with my parents there wasn’t really a lot of room in my bedroom for all of the vintage computing stuff I had accumulated. Often, I would lose interest in something and it would go into the attic.

At some point my Dad must have brought this monitor home from a thrift store. Unlike most CRT monitors, which have the cable permanently attached, this one has a detachable cable, and it had already been lost when he bought it (I have since purchased a replacement on eBay). With all of the Mac stuff put away and no monitor cable to test it with, it joined everything else in the attic and I forgot about it.

Years later when I stumbled upon it deep in the shadows of a poorly lit part of the room, I thought it was the cheaper Macintosh 12″ RGB monitor that went with the LC series. But then, I saw the name plate on the back.

This was an amazing stroke of luck because that’s a damn fine monitor. Back in the late 80s this was one of Apple’s high-end Trinitron monitors. Remember the Apple brochures from the Macintosh SE entry that my mother picked up in West Akron in 1989?

I’m fairly sure that the monitor sitting on the IIx in that picture is the AppleColor Hi-Res 13″. In fact, if you flip over that brochure, there it is, listed as the AppleColor RGB Monitor.

For reasons that will become obvious in a moment, the AppleColor RGB fits very nicely on the top of the Quadra 700 when it’s positioned as a desktop rather than a mini-tower.

There are some scratches and scuffs on the monitor, but for the most part it works and looks spectacular.

This monitor is a classic piece of Snow White-era Apple design. My favorite thing about it is the pair of large brightness and contrast dials on its side.

Apple also sold a rather attractive optional base for the AppleColor RGB monitor with great Snow White detailing, as seen in this drawing from Technical Introduction to the Macintosh Family: Second Edition.

Unfortunately I’ve never seen that base come up on eBay.

Oddly enough, when I ventured further into my parents’ attic I found a box of Macintosh stuff that a college roommate had rescued from the trash at a college graphics lab. Among other things, it contained the manual for this model of monitor.

The Quadra 700 is one of my all-time favorite thrift store finds. It was the first truly serious Macintosh I had owned from the expandable 680x0 era (roughly 1987 to 1994, when Apple moved to PowerPC CPUs). Before that, the most powerful Mac I had found was a Macintosh LC III with a color monitor. That machine introduced me to what using a color Macintosh had been like in the early 1990s, but the Quadra was on another level entirely.

To put this in perspective: the Macintosh LC III was a lower-end machine from 1993 that gave you something like the performance of a high-end Macintosh from 1989. The Quadra 700 (along with the Quadra 900, which was basically the same guts in a larger, more expandable case) was Apple’s late-1991 high-end machine. When it was new, the Quadra 700 cost a staggering $5700 without a monitor. The monitor could easily add another $1500.

In order to talk about the importance of the Quadra I have to go back to the Macintosh II series, which I also discussed in the Macintosh SE entry.

Apple created a lot of machines in the Macintosh II series and it’s a bit difficult to keep track of them. As you can see in the brochure, the original machine was the Macintosh II, built around Motorola’s 68020 processor and, for the first time in a Macintosh, a fully 32-bit bus. That machine was succeeded the following year by the Macintosh IIx, which, like all subsequent Macintosh II models, used the 68030 processor. The II and the IIx both had six NuBus expansion slots, which is why their cases are so wide.

If you’re more familiar with the history of Intel processors, don’t let the similar numbering schemes lead you into thinking the 68020 was equivalent to a 286 and the 68030 was equivalent to a 386. In reality, the original Macintosh’s 68000 CPU is more comparable to the 286, while the 68020 and 68030 were comparable to the 386. In the numbering scheme Motorola was using at the time, processors with an even second-to-last digit, like the 68000, 68020, and 68040, were new designs, and processors with an odd one, like the 68010 and 68030, were enhancements of the previous model. The 68030 gained an on-chip memory management unit (MMU), which enabled virtual memory. The jump from the 286 to the 386 was much greater than the jump from the 68020 to the 68030.
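The numbering rule of thumb in that paragraph is simple enough to state as a one-line check on the tens digit. This sketch only encodes the heuristic as described here for the chips named in this post; it is a mnemonic, not an official Motorola naming rule.

```python
# Encodes the rule of thumb above: in the 680x0 family, an even tens digit
# marks a new design and an odd one marks an enhancement of the previous chip.
# A mnemonic for the chips discussed here, not a Motorola specification.

CHIPS = [68000, 68010, 68020, 68030, 68040]


def is_new_design(part: int) -> bool:
    """True if the tens digit (the second-to-last digit) is even."""
    return (part // 10) % 2 == 0


def generation_label(part: int) -> str:
    """Human-readable label for the heuristic's verdict."""
    return "new design" if is_new_design(part) else "enhancement of previous chip"


for chip in CHIPS:
    print(chip, "-", generation_label(chip))
```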

The next machine in the series was the Macintosh IIcx in 1989, which basically took the guts of the IIx and put them in a smaller case with only three expansion slots (hence, it’s a II-compact-x). Like the II and the IIx, the IIcx had no on-board video and required a video card to be in one of the expansion slots.

Later that year Apple reused the same case for the Macintosh IIci, which added on-board video.

The case used in the Macintosh IIcx and IIci was designed to match the AppleColor High-Resolution RGB monitor I have in color, styling, and size, as seen in this illustration from Technical Introduction to the Macintosh Family: Second Edition.

As you’ve probably figured out by now, the Quadra 700 uses the same case as the Macintosh IIci, but with the Snow White detail lines and the Apple badge turned 90 degrees, turning it into a mini-tower. That’s why the monitor matches the Quadra so well.

The last Macintosh to use the full-sized six-slot Macintosh II case was the uber-expensive Macintosh IIfx in 1990. It used a blistering 40MHz 68030 and started at $8970.

However, if you bought a IIfx, you may have felt very silly the next year when the Quadra series based on the new 68040 processor came out and succeeded the Macintosh II series.

The 68040, especially the full version of the chip with the FPU (floating point unit) that the Quadra 700 used, was a huge jump in processing power.

According to these benchmarks at Low End Mac, the 25MHz 68040 in the Quadra 700 scores 33% higher than the Macintosh IIfx’s 40MHz 68030 on an integer benchmark and is five times as fast on a math benchmark. Plus, at $5700 it cost less than two-thirds of the IIfx’s $8970 starting price.
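Those benchmark figures look even more striking once clock speed and price are factored in. The sketch below takes only the numbers quoted in this post (a 1.33x integer score and a 5x math score, 25MHz versus 40MHz, $5700 versus $8970) and derives per-clock and per-dollar ratios. It assumes the scores scale linearly with clock speed and price, which real workloads rarely do exactly, so read the results as illustrative.

```python
# Per-clock and per-dollar comparison of the Quadra 700 and Macintosh IIfx,
# using only figures quoted in this post. Linear scaling is assumed.

quadra = {"mhz": 25, "price": 5700, "integer": 1.33, "math": 5.0}
iifx = {"mhz": 40, "price": 8970, "integer": 1.0, "math": 1.0}  # baseline


def per_clock(score: float, mhz: int, base_score: float, base_mhz: int) -> float:
    """Relative work done per MHz versus the baseline machine."""
    return (score / mhz) / (base_score / base_mhz)


int_per_clock = per_clock(quadra["integer"], quadra["mhz"], iifx["integer"], iifx["mhz"])
price_ratio = quadra["price"] / iifx["price"]
int_per_dollar = quadra["integer"] / price_ratio
math_per_dollar = quadra["math"] / price_ratio

print(f"Integer work per clock: {int_per_clock:.2f}x the IIfx")
print(f"Price: {price_ratio:.0%} of the IIfx")
print(f"Integer performance per dollar: {int_per_dollar:.2f}x")
print(f"Math performance per dollar: {math_per_dollar:.1f}x")
```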

The interior of the Quadra 700 is extremely tidy. The question Apple’s hardware designers were clearly working with was: what is the most efficient case layout if you need to pack a power supply, a hard disk, a 3.5″ floppy drive, and two full-length expansion slots into a case? In the Quadra 700 the two drives are at the front of the right side of the case, the PSU is at the back of the right side, and the two expansion slots take up the left side of the case.

You can tell how the arrival of CD-ROM drives threw a wrench into all of this serene order. You’re never going to shoehorn a 5.25″ optical drive into this case. And when you do get a CD drive into a case, you’re going to have an ugly-looking gap for the drive door rather than just the understated slot for the floppy. I think Apple’s designs lost a lot of their minimalist beauty when they started putting CD drives in Macintoshes soon after the Quadra 700.

Inside the case, the way everything is attached without screws is very impressive. The sides of the case and the cage that holds the drives form a channel that the PSU slides into. Assuming nothing is stuck, you should be able to pull out the PSU, detach the drive cables, and then pull out the drive cage in a few short minutes without using a screwdriver (actually, there’s supposed to be a screw securing the drive cage to the logic board, but it was missing in mine with no ill effects).

We tend to think of plastic in the pejorative. But, plastic is only cheap and flimsy when it’s badly done. This Quadra’s case is plastic done really, really well. It doesn’t flex or bend. It’s rock solid. But, when you pick the machine up it’s much lighter than you expect it to be.

Recently, I needed to replace the Quadra 700’s PRAM battery, which apparently dated from 1991.

The battery is located under the drive cage so this was a nice opportunity to remove the power supply and drive cage to see the whole board.

The new battery is the white one in the bottom right-hand corner.

Looking at the whole board there are two really interesting things to note here.

First, the logic board itself is attached to the rest of the plastic case using plastic slats and hold-downs. If I had wanted to remove the logic board and knew what I was doing, I could probably have done it in a few minutes.

Second, notice the six empty RAM slots. Curiously enough, on the Quadra 700 the shorter memory slots just above the battery are for the main RAM. I believe this machine has four 4MB SIMMs in addition to 4MB of RAM soldered onto the logic board (the neat horizontal row of chips labeled DRAM to the left of the SIMMs at the bottom of the picture). The larger, empty white slots are for VRAM expansion.

This series of articles by one of the Quadra 700’s designers (which I assume were posted to newsgroups back in 1991) shows how proud the team was of the video capabilities of the Quadra 700 and 900.

He makes three major points:

- The way the video hardware talks to the CPU makes it really, really fast compared to previous Macintoshes with built-in video and even expensive video cards for the Macintosh II series.

- The Quadra’s video hardware supports a wide variety of common resolutions and refresh rates including VGA’s 640×480 and SVGA’s 800×600. That’s why I can use the Quadra with that VGA adapter pictured above. This was neat stuff in an era when Macintoshes tended to be very proprietary.

- If you fully populate the VRAM slots (for a total of 2MB of VRAM), you can use 32-bit color at 800×600.

Point 3 just blows me away. To put that in perspective, the Matrox Mystique card my family bought in 1997 or so had 2MB of VRAM and did 800×600 in 32-bit color. There’s a good reason the Quadra 700 was so outrageously expensive: if you were a graphics professional and you needed true-color graphics, Apple would gladly make that happen for the right price.
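The arithmetic behind point 3 is a simple framebuffer calculation. This sketch assumes that 32-bit color means 4 bytes per pixel (24 bits of color plus an unused byte), that 2MB means 2 × 2^20 bytes, and that no VRAM is reserved for anything besides the visible frame. The verdicts are memory arithmetic only, not a statement of which modes the Quadra’s video hardware actually offers.

```python
# Framebuffer size check: which resolution/depth combinations fit in 2MB of VRAM?
# Assumes bits_per_pixel / 8 bytes per pixel and no VRAM reserved elsewhere.

VRAM_BYTES = 2 * 2**20


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed for a single frame at the given resolution and depth."""
    return width * height * bits_per_pixel // 8


MODES = [(640, 480, 8), (640, 480, 32), (800, 600, 16), (800, 600, 32), (1024, 768, 32)]

for width, height, bpp in MODES:
    need = framebuffer_bytes(width, height, bpp)
    verdict = "fits" if need <= VRAM_BYTES else "does not fit"
    print(f"{width}x{height} @ {bpp}-bit: {need:,} bytes ({verdict} in 2MB)")
```

An 800×600 true-color frame needs 1,920,000 bytes, about 92% of 2MB, which is why filling every VRAM slot was the price of admission.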

There is a person on eBay selling the VRAM SIMMs that the Quadra uses. It would probably cost me about $50 to populate those VRAM slots. It’s very tempting.

I’m planning on doing another entry on the Quadra 700 sometime in the future to talk about what actually using this machine is like.

A Meditation on Racing Videogames

I’ve been on a videogame kick recently, so I ask you to indulge me once again. The post on the Saturn, and my memories of Daytona USA and Wipeout specifically, stirred my thoughts about the racing game genre.

I own a few racing videogames.

It hadn’t actually occurred to me quite how many racing games I own until I tried to gather most of them in one place to get this picture. I also have several groups of games not shown here:

- A whole era of PC racing games from 1997-2005 or so, like Rally Trophy, Motorhead, RalliSport Challenge, Colin McRae Rally 3, and others, that I don’t have the boxes for at my apartment.

- A whole group of PC racing games like Midtown Madness that I just have in jewel cases from thrift stores.

- More recent purchases like Dirt 2, Blur, Fuel, and Grid 2 that I only own digitally.

I’m not really a big car guy or even someone who really enjoys driving in real life. It’s not really the cars that draw me into racing games.

I think what it comes down to is that I love playing videogames but I hate the “instant death” mechanic in most types of games.

That is to say, if Mario falls down a hole, he’s dead and you have to go back to the start of the level. If a Cyberdemon’s rocket hits Doom Guy, he’s dead and you go back to the start of the level. In the vast majority of games the punishment for failure is that you, the player, are ripped away from whatever you’re doing and lose progress in some way.

Racing games are in many ways the opposite of this. If you go off the road or hit a wall, you generally lose time and probably won’t win the race, but the priority is to politely get you going on your way again. Even in games like San Francisco Rush, where you can hit a wall and explode, you are quickly thrust back into the race somewhere further down the track. It’s like spilling a glass of water at a nice restaurant: the waiter quickly comes over to mop it up and replace your drink. You and he both know you just did a silly thing, but he wants you back enjoying yourself as soon as possible.

That does not mean that these games are “soft”; it’s just that they don’t believe that constantly slapping you across the face is good value for money.

I mentioned in the Sega Saturn post that I don’t tend to like 2D games. The vast majority of racing games operate from an inherently 3D perspective that places the camera either behind the car or on the car’s bumper. In the pre-3D era there were attempts to do racing games with 3D-ish perspectives using 2D graphics, and I own a few, such as Pole Position II and Out Run.

It was difficult for 2D games to draw a convincing curving road so these games tend to make the player avoid traffic rather than attempt to portray realistic driving.

Racing games as we know them today trace their ancestry back to arcade games like Namco’s Winning Run (1988) and Sega’s Virtua Racing (1992) that were among the first to use 3D graphics and be able to draw a road in a more realistic way.

When consoles started using 3D graphics a nice looking racing game was a surefire way for console makers to show off their technology. A nicely rendered car on some nicely rendered road with some glittering buildings behind it is much less likely to trigger the uncanny valley than a rendering of a human. I think we can all agree that games like Gran Turismo 5 have come closer to real looking car paint than any game has come to real looking human skin.

Throughout the 1990s racing games exploded into several distinct sub-genres:

Arcade-style games are the direct descendants of Virtua Racing and Winning Run, and the sub-genre takes its name from those arcade roots. These days, these games are seldom released in arcades. Arcade-style racing games are not expected to have realistic handling; instead, their handling is designed for fun more than thought. The brake pedal/button in many of these games is a mere formality. Early in the 3D racing era, companies like Namco and Sega added drift mechanics to their games that let you toss the car around corners at speed rather than braking realistically. Arcade racers like Daytona USA and Ridge Racer were very popular in the 32-bit era (PlayStation, N64, Saturn), but the arrival of mainstream simulation racers drew attention away from these games in the late 1990s and early 2000s (which sadly meant many people overlooked the fantastic Ridge Racer Type 4). The arrival of Burnout 3: Takedown in 2004 reinvigorated the sub-genre by letting you knock other cars off the road rather than just trying to pass them.

Beginning with games like Driver, free-roaming racing games left the predefined track and let the player roam through cities and other environments. Crazy Taxi (1999) combined the arcade style with free roaming, forcing the player to memorize routes through a large city in order to pick up and deliver fares. Test Drive Unlimited (2006) allowed players to drive around the whole island of Oahu. Burnout Paradise (2008) put the frantic Burnout style of arcade racing into an open city.

Kart racing games (named after Super Mario Kart) tend to have arcade-style handling but in most cases put a recognizable character like Mario or Sonic in a tiny car. In Kart racers you’re expected to drive over or through symbols/objects on the track to pick up weapons, which you can use to slow down or otherwise befuddle opponents, or to drive over speed pads that give you a nitro boost. In Kart games the strategy of using the weapons is as important as, or more important than, your ability to guide the car. The Kart racing genre is 90% Mario Kart and 10% everyone else. If you’ve never played them, I recommend Sega’s two recent kart racing games: Sonic & Sega All-Stars Racing and its sequel, Sonic & All-Stars Racing Transformed.

Futuristic racing games are a close cousin to Kart racers because they also allow you to pick up weapons on the track to hurt opponents. Generally, futuristic racing games have more realistic graphics than Kart games and a cyberpunk/dystopian visual atmosphere accompanied by an electronica soundtrack. Wipeout is the patron saint of futuristic racing games.

Simulation racing games seek to exactly simulate the handling characteristics of a real car. Games like the Gran Turismo series, F355 Challenge and the famous Grand Prix Legends take great pride in the way they have exactly replicated real tracks and the handling of real cars on those tracks. There are actually people who have become real drivers after learning in these games. Gran Turismo popularized the idea that mainstream simulation games should have dozens of realistically rendered sports cars that need to be collected by the player. In Gran Turismo the incentive to race is to collect cars. Today Microsoft’s Forza series and Sony’s Gran Turismo are the kings of mainstream simulation racing.

There is another sub-genre that doesn’t really have an established name that sits somewhere between the simulation and arcade styles of handling. I like to call it sim-arcade. The Dreamcast’s Metropolis Street Racer is an early example of this type of game. Codemasters’ Grid and Grid 2 are more recent examples. In sim-arcade racing games you still have to think about your line and brake correctly for the turns, but they’re not quite as anal about it as the simulation games. A lot of people confuse this style with the arcade style because they assume that any game that is not full-on simulation must be arcade-style. A good rule of thumb is that if you don’t have to use the brake except to trigger a drift, you’re playing an arcade-style racing game. If you have to actually brake for a turn and you’re not playing a sim, it’s probably the sim-arcade style. There can be an arrogance among simulation players that more realistic games are better, but I tend to really enjoy the sim-arcade style.

Descending from 1995’s Sega Rally Championship, rally racing games are intended to replicate rally driving on off-road surfaces. The actual sport of rally racing takes place as a series of time trials in which cars drive individually, but some of these games allow players to drive against other cars as well. The Sega Rally games take a more arcade approach to handling, while Codemasters’ Colin McRae Rally and Dirt games take a more balanced approach somewhere between sim and arcade. Today Dirt 3 and Dirt Showdown basically own this genre.

I wish I could say that the current state of the racing game genre is as rich and vibrant as it was in the past. There is a crunch going on in the videogame industry where game budgets are increasing faster than sales and the large videogame publishers increasingly feel they can’t take risks about what their customers will buy. I suspect that racing games have become labeled risky niche products while military-style first person shooters like Call of Duty and third person action games like Assassin’s Creed are commanding big budget development dollars.

In 2010, when Disney and Activision put out well-advertised arcade-style racing games (Split/Second and Blur, respectively), sales were abysmal and the excellent studios behind those games (Black Rock and Bizarre Creations, respectively) were closed. Neither the fact that both games looked superficially similar, which confused potential customers, nor the fact that both were released in the same week, up against the blockbuster hit Red Dead Redemption, seems to have entered any executive’s mind as a reason for the poor sales. A chill seemed to descend upon the arcade racing sub-genre after that, with only Electronic Arts carrying the torch with their Need for Speed games.

The videogame-playing public, it seems, is sick of just going around in circles, and developers can’t seem to figure out what to do next.

Lately developers have been keen to invent shockingly dumb and bizarre plots to justify racing games:

- In Split/Second the game is supposed to be some massive reality TV show where the producers have conveniently rigged an entire abandoned city to explode while daredevils race through it in order to provide interesting television.

- In Driver: San Francisco the main character is actually having a coma dream where he believes he can jump into the consciousnesses of drivers in San Francisco and complete tasks in their cars. I wish I was making this up.

Racing games, like puzzle games, are probably better off without plots.

Still, I think racing games need a kick in the pants in order to stay a relevant mainstream genre.

In other genres, like side-scrolling shooters and platformers, indie developers are helping those genres find the souls they had lost under the weight of big-budget design by committee. I’m hoping something similar happens with racing games. I am extremely enthusiastic about the 90s Arcade Racer game on Kickstarter, which is a love letter to the arcade racing games Sega made in the late 1990s (Super GT/SCUD Race and Daytona USA 2) that it was too stubborn to release on consoles.

Sega Saturn

This is my Sega Saturn, which I bought used at The Record Exchange (now simply The Exchange) on Howe Rd. in Cuyahoga Falls in October 1999.

I know this because I saved the date-stamped price tag by sticking it on the Saturn’s battery door.

The Saturn was Sega’s 32-bit game console, a contemporary of the better-known Nintendo 64 and Sony PlayStation. It lived a short, brutish existence in which it was pummeled by the PlayStation. In the US the Saturn came out in May 1995 and was basically dead by the end of 1998.

The Saturn was the first game console that I truly loved. Keep in mind that I bought mine after the platform was dead and buried and used game stores were eager to unload most of the games for less than $20. If I had paid $399 for one brand new in 1995 with a $50 copy of Daytona USA I might have different feelings.

The thing that makes the Saturn intensely interesting is how it was simultaneously such a lovable platform and a disaster for Sega. It’s a story about what happens when executives totally misunderstand their market and what happens when you give great developers a limited canvas to make great games with and they do the best they can.

When you look at the Saturn totally out of its historical context and just look at it on its own, it’s a fine piece of gaming hardware. Compared to the Sega CD it replaced, the quality of the plastic seems to have been improved. The Saturn is substantial without being outrageously huge. The whole thing was built around a top-loading 2X CD-ROM drive.

It plays audio CDs from an on-screen menu that also supports CD+G discs (mostly for karaoke).

It has a CR2032 battery that backs up the internal memory for saved games, accessible behind a door at the rear of the console.

It has a cartridge slot for adding additional RAM and other accessories like GameSharks.

There were several official controllers available for it during its lifetime.

The two I own are this 6-button digital controller:

And the “3D controller” with the analog stick that was packaged with NiGHTS:

You can see the clear family resemblance to the Dreamcast controller.

My Saturn is a bit odd because at some point the screws that hold the top shell to the rest of the console sheared off.

That’s not supposed to happen. All that’s holding the two parts of the Saturn together is the clip on the battery door. Fortunately this gives me an excellent opportunity to show you what the inside of the Saturn looks like:

Despite all of this, my Saturn still works after at least 15 years of service.

I should explain why I was buying a Saturn for $25 at The Record Exchange in 1999. You might say that several decisions by my parents and misguided Sega executives led to that moment.

My parents never bought my brother and me videogames when we were children. I’m not sure if they thought games were time wasters or wastes of money. Or it could have been that they had no philosophical problem with them but were uncomfortable buying a toy that cost more than $100. Whatever the reason, we didn’t have videogames. Considering how many awful games people dropped $50 on in the pre-Internet days, when there was so little information about which games were worth buying, I can see their point. I also know now that there were plenty of perfectly good games that were so difficult that you might stop playing in frustration and never get your money’s worth out of them.

Instead, videogames were something I would only see at a friend’s house…and when those moments happened they were magical.

I can’t speak for women of my generation but at least for a lot of males of the so-called “Millennial generation” videogames are to us what Rock ‘n Roll was to the Baby Boomers. They are the cultural innovation that we were the first to grow up with and they define us as a generation. If you’re looking for particular images that define a generation and I say to you “Jimi Hendrix at Woodstock” you think Baby Boomers. If I say to you “Super Mario Bros.” you think Millennials.

By 1996 or so I was pretty interested in buying some sort of videogame console, but I was somewhat restricted in what I could afford. The first game system I bought was a used original Game Boy in 1996. However, shortly after that my family got a current PC and my interests shifted to computer games: Doom, Quake, etc., which is what eventually led to the Voodoo 2.

By 1998 I noticed how dirt cheap the Sega Genesis had become so my brother and I chipped in together to buy a used Genesis (which I believe we bought from The Record Exchange). I quickly found that I did enjoy playing 2D games but I was really enjoying the 3D games I was playing on PC.

Sega did an oddly consistent job of porting their console games to PC in the 1990s, so I had played PC versions of some of the games that came out on Saturn in the 1995-1998 timeframe, including the somewhat middling PC port of Daytona USA.

So in 1999 when I came across this used Saturn for a mere $25 at The Record Exchange, I was eager to buy it.

But why was the Saturn $25 when a used PlayStation or N64 was most likely going for $80-$100 at the same time?

As I noted in the Sega Genesis Nomad post, Sega was making some very strange decisions about hardware in the mid-1990s. At that time Sega was at the forefront of arcade game technology. Recall that in the Voodoo 2 post I said that if you sat down at one of Sega’s Daytona USA or Virtua Fighter 2 machines in 1995 you were basically treated to the most gorgeous videogame experience money could buy at the time. That’s because Sega was working with Lockheed Martin to use 3D graphics hardware from flight simulators in arcade machines.

At the same time as they were redefining arcade games Sega was busy designing the home console that would succeed the popular Genesis (aka the console people refer to today as simply “the Sega”). Home consoles were still firmly rooted in 2D, but there were cracks appearing. For example, Nintendo’s Star Fox for the Super Nintendo embedded a primitive 3D graphics chip in the cartridge and introduced a lot of home console gamers to 3D, one slowly rendered frame at a time. Sega pulled a similar trick with the Genesis port of Virtua Racing, which embedded a special DSP chip in the cartridge (you may remember this from the Nomad post):

Sega decided on a design for the Saturn which would produce excellent 2D graphics with 3D graphics as a secondary capability. The way the Saturn produced 3D was a bit complicated but basically it could take a sprite and position it in 3D space in such a way that it acted like a polygon in 3D graphics. If you place enough of these sprites on the screen you can create a whole 3D scene.
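For the curious, here’s a rough sketch of that idea in Python. It is a simplification assumed for illustration, not a model of how the Saturn’s VDP1 actually draws: a flat rectangular sprite is stretched onto four arbitrary screen corners by interpolating between them, so a square tile can be made to look like a patch of floor receding into the distance. The function names are made up for this example.

```python
# A rough sketch of stretching a flat sprite onto four screen corners.
# Assumption: plain bilinear interpolation between the corners, with no
# perspective correction. This is NOT the Saturn VDP1's actual algorithm.
from typing import List, Tuple

Point = Tuple[float, float]


def lerp(a: Point, b: Point, t: float) -> Point:
    """Linear interpolation between two 2D points (t = 0 gives a, 1 gives b)."""
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def sprite_to_screen(corners: List[Point], u: float, v: float) -> Point:
    """Map a sprite coordinate (u, v), each in [0, 1], onto the quad.

    corners are ordered top-left, top-right, bottom-right, bottom-left.
    """
    tl, tr, br, bl = corners
    top = lerp(tl, tr, u)      # matching point on the top edge
    bottom = lerp(bl, br, u)   # matching point on the bottom edge
    return lerp(top, bottom, v)


# A square floor tile seen at an angle: the far (top) edge is narrower.
floor_quad = [(120.0, 100.0), (200.0, 100.0), (260.0, 180.0), (60.0, 180.0)]
for uv in [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 0.5)]:
    print(uv, "->", sprite_to_screen(floor_quad, *uv))
```

Because this interpolation happens in flat screen space with no depth information, a texture drawn this way can look subtly warped compared with a true perspective projection.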

I can see in retrospect how this made sense to Sega’s executives. People like 2D games, so let’s make a great 2D machine. They also must have considered that 3D hardware on the same level as their arcade hardware was not feasible in a $400 home console.

However, Sega’s competitors didn’t see things that way. Sony and Nintendo both built the best 3D machines they could, 2D be damned. One would expect they did this largely in response to the popularity of Sega’s 3D arcade games.

The story that’s gone around about Sega’s reaction to this is that in response they decided to put a second CPU in the Saturn. I have no idea if that’s why the Saturn ended up with two Hitachi SH-2 CPUs, but it would make sense if it was an act of desperation.

Having two CPUs is one of those things that sounds great but in reality can turn into a real mess. A CPU is only as fast as the rest of the machine can feed it things to do. If, say, one CPU is reading from the RAM and the other can’t at the same time, it sits there idle, waiting. There are also not that many types of work that can easily be spread across two CPUs without some loss in efficiency. If the work one CPU is doing depends on work the other CPU is still working on, the first CPU sits there idle, waiting. These are problems in computer science that people are still working furiously on today. These were not problems Sega was going to solve for a rushed videogame console launch 19 years ago.
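A standard rule of thumb called Amdahl’s law (not something the Saturn’s designers are known to have cited) puts a number on the second problem. If only a fraction of the work can be split between CPUs, the rest still runs on one CPU, and that caps the speedup. The fractions below are illustrative guesses, not measurements of any Saturn game, and the model ignores the memory-sharing problem entirely, which only makes things worse.

```python
def amdahl_speedup(parallel_fraction: float, n_cpus: int) -> float:
    """Amdahl's law: best-case speedup when only part of the work can be split.

    The serial part (1 - p) still runs on one CPU; the parallel part p is
    divided evenly across n_cpus. Memory-bus contention is ignored.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_cpus < 1:
        raise ValueError("n_cpus must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_cpus)


# Illustrative fractions only; nobody measured these for Saturn games.
for p in (0.25, 0.5, 0.9, 1.0):
    print(f"{p:.0%} parallel -> {amdahl_speedup(p, 2):.2f}x with two CPUs")
```

Even if half of a game’s work split perfectly across both SH-2s, the second CPU would buy only about a 1.33x speedup, not the 2x the spec sheet implies.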

The design they ended up with for the Saturn was immensely complicated. All told, it contained:

- Two Hitachi SH-2 CPUs

- One graphics processor for sprites and polygons (VDP1)

- One graphics processor for background scrolling (VDP2)

- One Hitachi SH-1 CPU for CD-ROM I/O processing

- One Motorola 68000 derived CPU as the sound controller

- One Yamaha FH1 sound DSP

- Apparently there was another custom DSP chip to assist with 3D geometry processing

That’s a lot of silicon. It was expensive to manufacture and difficult to program. The PlayStation, which started life at $299, had a single CPU and a single graphics processor and in general produced better results than the Saturn.

Sega had psyched itself out. Here was the company that was showing everyone what brilliant 3D arcade games looked like, and it failed to understand that it had fundamentally changed consumer expectations. It built a game console to win the last war, so to speak.

When the PlayStation and N64 arrived they ushered in games that were built around 3D graphics. Super Mario 64, in particular, made consumers expect increasingly rich 3D worlds, exactly the type of thing the Saturn did not excel at.

Sega had gambled on consumers being interested in the types of games they produced for the arcades: Games that were short but required hours of practice to master. By 1997-1998 consumers’ tastes had changed and they were enjoying games like Gran Turismo that still required hours to master but offered hours of content as well. 1995’s Sega Rally only contained four tracks and three cars. 1998’s Gran Turismo had 178 cars on 11 tracks.

Sega’s development teams eventually adapted to this new reality but it was too late to save the doomed Saturn. Brilliant end-stage Saturn games like Panzer Dragoon Saga and Burning Rangers would never reach enough players’ hands to make a difference.

For the eagle-eyed: this is a US copy of Burning Rangers with a jewel case insert printed from a scan of the Japanese box art.

By fall 1999 the Saturn was dead and buried as a game platform. Not only had it failed in the marketplace, but its hurried successor, the Dreamcast, was now on store shelves. That’s why a used Saturn was $25 in 1999.

Even though the Saturn had failed, the games weren’t bad, and since I was buying them after the fact they were dirt cheap. I accumulated quite a few of them:

Oddly enough, my favorite Saturn game was the much criticized Saturn version of Daytona USA that launched with the Saturn in 1995.

The original Saturn version of Daytona USA was a mess. Sega’s AM2 team, who had developed the original arcade game, had been tasked with somehow creating a viable Saturn version of Daytona USA. The whole point of the game was that you were racing against a large number of opponents (up to 40 on one track). The Saturn could barely do 3D and here it was being asked to do the impossible.

The game they produced was clearly a rushed, sloppy mess. But it was still fun! The way the car controls is still brilliant even if the graphics can barely keep up. I fell in love with Daytona. Later Sega attempted several other versions of Daytona on Saturn and Dreamcast but I vastly prefer the original Saturn version, imperfect as it may be.

Another memorable game was Wipeout. To be honest, when I asked to see what Wipeout was one day at Funcoland I had no idea that the game was a futuristic racing game. I thought it had something to do with snowboarding!

Wipeout was a revelation. Sega’s games were bright and colorful with similarly cheerful, jazzy music. Wipeout is a dark and foreboding combat racing game that takes place in a cyberpunk-ish corporate dominated future. I still catch myself humming the game’s European electronica soundtrack. The game used CD audio for the soundtrack so you could put the disc in a CD player and listen to the music separately if you wished. Wipeout was the best of what videogames had to offer in 1995: astonishing 3D visuals and CD quality sound.

From about 1999 to 2000 I had an immense amount of fun collecting cheap used Saturn classics like NiGHTS, Virtua Cop, Panzer Dragoon, Sonic R, Virtua Fighter 2, Sega Rally, and others…As odd as this is to say, the Saturn was my console videogame alma mater.

Today I understand that something can be a business failure but not a failure to the people who enjoyed it. To me, the Saturn was a glorious success and I treasure the time I have had with it.

3DFX Voodoo2 V2 1000 PCI

This is a 3DFX Voodoo 2 V2 1000 PCI still sealed in the box.

I actually own three Voodoo 2’s. The first one is a Metabyte Wicked 3D (below, with the blue colored VGA port) that I bought from a friend in high school. The second one is the new-in-box 3DFX-branded Voodoo 2 I bought off of ShopGoodwill last year. The third one (below, with the oddly angled middle chip) is a Guillemot Maxi Gamer 3D2 I bought at the Cuyahoga Falls Hamfest earlier this year.

The Voodoo 2, in all of its manifestations, is my favorite expansion board of all time. It’s one of the last 3D graphics boards that normally operated without a heatsink, so you can gaze upon the bare ceramic chip packages and the lovely 3DFX logos emblazoned upon them. It was also pretty much the last 3D graphics board where the various functions of the rendering pipeline were still broken out into three separate chips (two texture mapping units and a frame buffer processor). The way the three chips are laid out in a triangle is like watching jet fighters flying in formation.

It’s hard to explain to someone who wasn’t playing PC games in the late-1990s what having a 3DFX board meant. It was like having a light switch that made all of the games look much, much better. There are perhaps three tech experiences that have utterly blown my mind. One of them was seeing DVD for the first time. Another is seeing HDTV for the first time. Seeing hardware accelerated PC games on a Voodoo 2 was on the same level.

A friend of mine in high school won the Metabyte Wicked 3D in an online contest. I remember that on the day it arrived at his house I had walked home from school, trudging up and down the snow that had been plowed onto the sidewalks to clear the roads, and I got home exhausted…And then he called me asking if I wanted to come over while he installed the Voodoo 2 card and fired up some games. Even though I was exhausted I eagerly accepted.

I think I saw hardware accelerated Quake 2 that day…Nothing else would ever be the same. I was immensely jealous.

Ever since personal computers have been connected to monitors there has been some sort of display hardware in a computer that output video signals. Often this hardware included capabilities that enhanced or took over some of the CPU’s role in creating graphics.

When we talk about 2D graphics we mean graphics where, for the most part, the machine is copying an image from one place in the computer’s memory and putting it in another place in the computer’s memory. For example, if you imagine a scene from, say, Super Mario Bros., the background is made up of pre-prepared blocks of pixels (ever notice how the clouds and the shrubs are the same pattern of pixels with a color shift?), and Mario and the bad guys are each drawn from a sprite, a small image that is one frame of a short animation. These pieces of images are combined together in a special type of memory that is connected to a chip that sends the final picture to the TV screen or monitor.

It’s sort of like someone making one of those collages where you cut images out of a magazine and paste them on a poster-board. The key to speeding up 2D graphics in a computer is speeding up the process of copying all of the pieces of the image to the special memory where they need to end up. You might have heard about the special “blitter” chips in some Atari consoles and the famous Amiga computers that made their graphics so great. 2D graphics were ideal for the computing power of the time but they gave videogame designers limited ability to show depth and perspective in videogames.
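To make the collage idea concrete, here’s a minimal software version of a “blit” in Python: copy a sprite into a screen buffer pixel by pixel, skipping the pixels marked as transparent so the background shows through around the character. A blitter chip does essentially this job in hardware. Treating color 0 as transparent is an assumption for this sketch, not any particular console’s rule.

```python
# A minimal software "blit": copy a sprite into a framebuffer, skipping
# transparent pixels. Colors are plain integers (think palette indices).
# TRANSPARENT = 0 is an assumption for this sketch.
from typing import List

Image = List[List[int]]
TRANSPARENT = 0


def blit(dest: Image, sprite: Image, x: int, y: int) -> None:
    """Paste sprite into dest with its top-left corner at (x, y).

    Pixels that would land outside dest are clipped (silently skipped).
    """
    for row, line in enumerate(sprite):
        dy = y + row
        if not 0 <= dy < len(dest):
            continue
        for col, color in enumerate(line):
            dx = x + col
            if color != TRANSPARENT and 0 <= dx < len(dest[dy]):
                dest[dy][dx] = color


# An 8x4 "screen" filled with sky (color 1) and a 3x3 sprite (color 7).
screen = [[1] * 8 for _ in range(4)]
sprite_pixels = [[0, 7, 0],
                 [7, 7, 7],
                 [7, 0, 7]]
blit(screen, sprite_pixels, 2, 1)
for line in screen:
    print("".join(str(c) for c in line))
```

The 0 pixels in the sprite leave the sky color untouched, which is how a character can be a non-rectangular shape even though it’s stored as a rectangle.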

Outside of flight simulator games and the odd game like Hard Drivin and Indy 500 almost all videogames used 2D graphics until the mid-1990s. PC games during the 2D graphics era were mostly being driven by the CPU. If you bought a faster CPU, the games got more fluid. There were special graphics boards you could buy to make games run faster, but the CPU was the main factor in game performance.

Beginning in about 1995-1996 there was a big switch to 3D graphics in videogames (which is totally different than the thing where you wear glasses and things pop out of the screen…that’s stereoscopics) and that totally changed how the graphics were being created by the computer. In 3D graphics the images are represented in the computer by a wireframe model of polygons that make up a scene and the objects in it. Special image files called textures represent what the surfaces of the objects should look like. Rendering is the process of combining all of these elements to create an image that is sent to the screen. The trick is that the computer can rotate the wireframe freely in 3D space and then place the textures on the model so that they look correct from the perspective of the viewer, hence “3D”. You can imagine it as being somewhat akin to making a diorama.
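The “rotate the wireframe, then draw it from the viewer’s perspective” step can be sketched in a few lines of Python. This is a bare-bones illustration, not how any particular game or card does it: the camera distance and focal length are made-up parameters, and textures are left out entirely. The key line is the division by depth, which is what makes far-away corners land closer to the center of the screen.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotate_y(p: Vec3, angle: float) -> Vec3:
    """Rotate a point around the vertical (Y) axis by angle radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (x * c + z * s, y, -x * s + z * c)


def project(p: Vec3, camera_distance: float, focal: float,
            width: int, height: int) -> Tuple[float, float]:
    """Perspective-project a 3D point to screen coordinates.

    Assumes a camera camera_distance units in front of the origin looking
    down +Z. Dividing by depth makes distant points crowd toward the center.
    """
    x, y, z = p
    depth = z + camera_distance
    if depth <= 0:
        raise ValueError("point is behind the camera")
    sx = width / 2 + focal * x / depth
    sy = height / 2 - focal * y / depth  # screen Y grows downward
    return (sx, sy)


# The eight corners of a cube, the simplest possible wireframe model.
cube: List[Vec3] = [(x, y, z) for x in (-1.0, 1.0) for y in (-1.0, 1.0) for z in (-1.0, 1.0)]

for corner in cube:
    turned = rotate_y(corner, math.radians(30))
    sx, sy = project(turned, 5.0, 200.0, 320, 240)
    print(corner, "->", (round(sx, 1), round(sy, 1)))
```

Changing the angle and re-running the loop is, in miniature, what a 3D game does every frame for every vertex in the scene.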

With 3D graphics videogame designers gained the same visual abilities as film directors: Assuming the computer could draw a scene they could place the player’s perspective anywhere they desired.

The problem with 3D graphics is that they are much more taxing computationally than 2D graphics. They taxed even the fastest CPUs of the era.

In 1995-1996 when the first generation of 3D games started appearing on PCs they looked like pixelated messes on a normal computer. You could only play them at about 320×240, objects like walls in the games would get pixelated very badly when you got close to them, and the frame rate was a jerky 20 fps if you were lucky. Games had started using 3D graphics and as a result required the PC’s CPU to do much, much more work than previous games. When Quake, one of the first mega-hit 3D graphics-based first person shooters, came out it basically obsoleted the 486 overnight because it was built around the Pentium’s floating-point (read: math) capabilities. But even then you were playing it at 320×240.
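Some back-of-the-envelope arithmetic shows why software rendering stayed stuck at low resolutions: without a 3D card, the CPU had to work out the color of every single pixel, every frame. The 640×480 at 30 fps target below is an illustrative assumption, not a figure from any particular game.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """How many pixels the renderer must produce every second."""
    return width * height * fps


software = pixels_per_second(320, 240, 20)   # the "if you were lucky" case
better = pixels_per_second(640, 480, 30)     # a hypothetical higher target
print(f"{software:,} vs {better:,} pixels/s ({better / software:.0f}x more work)")
```

Doubling the resolution in each direction and bumping the frame rate from 20 to 30 fps means six times as many pixels per second, which is far more than a CPU upgrade of the era could deliver.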

At the same time arcade games had been demonstrating much better looking 3D graphics. When you sat down in front of an arcade machine like Daytona USA or Virtua Fighter 2 what you saw was fluid motion and crisp visuals that clearly looked better than what your PC was doing at home. That’s because they had dedicated hardware for producing 3D graphics that took some types of work away from the CPU. These types of chips were also used in flight simulators and they were known to be insanely expensive. However, by the time the N64 came out in 1996 this type of hardware was starting to make its way into homes. What PCs needed was their own dedicated 3D graphics hardware. They needed hardware acceleration.

That’s what the Voodoo 2 is. The Voodoo 1 and its successor the Voodoo 2 were 3DFX’s arcade-quality 3D graphics products for consumer use.

A texture mapping unit (the two upper chips labeled 3DFX on the Voodoo 2) takes the textures from the memory on the graphics board (many of those smaller rectangular chips on the Voodoo 2) and places them on the wireframe polygons with the correct scaling and distance. The textures may also be “lit” where the colors of pixels may be changed to reflect the presence of one or more lights in the scene. A framebuffer processor (the lower chip labeled 3DFX) takes the 3D scene with the texture and produces a 2D image that is built up in the framebuffer memory (the rest of those smaller, rectangular chips in the Voodoo 2) that can be sent to the monitor via the RAMDAC chip (like a D/A converter for video, it is labeled GENDAC on the Voodoo 2).
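Here’s a toy version of the per-pixel work a texture mapping unit takes off the CPU: look up the texel for a point on a polygon, then scale its color by how brightly that spot is lit. It’s only a sketch of the idea under simple assumptions (RGB tuples, a single brightness value, nearest-texel lookup); the real chip does this in fixed-function hardware with far more options, and none of these function names come from 3DFX.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Texture = List[List[RGB]]


def sample_nearest(texture: Texture, u: float, v: float) -> RGB:
    """Look up the texel nearest to texture coordinates (u, v) in [0, 1]."""
    h, w = len(texture), len(texture[0])
    x = min(int(u * w), w - 1)
    y = min(int(v * h), h - 1)
    return texture[y][x]


def light(color: RGB, intensity: float) -> RGB:
    """Scale a texel by a light intensity (0 = dark, 1 = fully lit)."""
    r, g, b = (min(255, round(c * intensity)) for c in color)
    return (r, g, b)


def shade_pixel(texture: Texture, u: float, v: float, intensity: float) -> RGB:
    """Roughly what one pixel costs: fetch a texel, then light it."""
    return light(sample_nearest(texture, u, v), intensity)


# A tiny 2x2 "brick" texture.
brick: Texture = [[(180, 60, 40), (150, 50, 30)],
                  [(150, 50, 30), (180, 60, 40)]]
print(shade_pixel(brick, 0.1, 0.1, 0.5))
```

Multiply that by every pixel on a 640×480 screen, 30 or more times a second, and it’s clear why moving this loop into dedicated chips made such a difference.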

3DFX was the first company to produce really great 3D graphics chips for consumer consumption in PCs. Their first consumer product was the Voodoo 1 in late 1996. It was soon followed in 1998 by the Voodoo 2.

The Voodoo 2 is a PCI add-in board that does not replace the PC’s 2D graphics card. Instead, there’s a cable that goes from the 2D board to the Voodoo 2 and then the Voodoo 2 connects to the monitor. This meant that the Voodoo 2 could not display 3D in a window, but what you really want it for is playing full-screen games, so it’s not much of a loss.

My friend who won the Metabyte Wicked 3D card later bought a Voodoo 3 card and sold me the Voodoo 2 sometime in 1999.

I finally had hardware acceleration. At the time we had a Compaq Presario that had begun life as a Pentium 100 and had been upgraded with an Overdrive processor to a Pentium MMX 200. It was getting a bit long in the tooth by this time, which was probably 1999. Previously I had made the mistake of buying a Matrox Mystique card with the hope of it improving how games looked and being bitterly disappointed in the results.

Having been a big fan of id Software’s Doom I had paid full price ($50) for their follow-up game Quake after having played the generous (quarter of the game) demo over and over again. Quake was by far my favorite game (and it’s still in my top 5).

id had known that Quake could look much better if it supported hardware acceleration. They had become frustrated with the way that they needed to modify Quake in order to support each brand of 3D card. Basically, the game needs to instruct the card on what it needs to do and each card used a different set of commands. id had decided to create their own set of commands (called a miniGL because it was a subset of SGI’s OpenGL API) in the hope that 3D card makers would supply a library that would convert the miniGL commands into commands for their card. The version of Quake they created to use the miniGL was called GLQuake and it was available as a freely downloadable add-on.
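The arrangement id was after is what programmers would call an adapter or translation layer. Here’s a hypothetical Python sketch of the shape of it: the “game” is written once against a small fixed set of calls, and each card maker plugs in its own translator. The method names and the card commands are invented for illustration; they are not real OpenGL, miniGL, or 3DFX calls.

```python
# Hypothetical sketch of the miniGL idea: the game is written against one
# small command set, and each card maker supplies a translator. The card
# commands below are invented; they are not real OpenGL or 3DFX calls.
from abc import ABC, abstractmethod
from typing import List, Tuple

Vertex = Tuple[float, float, float]


class MiniGL(ABC):
    """The small, fixed set of calls the game itself uses."""

    def __init__(self) -> None:
        self.vertices: List[Vertex] = []
        self.log: List[str] = []  # commands "sent" to the card

    def begin_triangle(self) -> None:
        self.vertices = []

    def vertex(self, x: float, y: float, z: float) -> None:
        self.vertices.append((x, y, z))

    def end(self) -> None:
        if len(self.vertices) != 3:
            raise ValueError("a triangle needs exactly three vertices")
        self.submit(self.vertices)

    @abstractmethod
    def submit(self, tri: List[Vertex]) -> None:
        """Vendor-specific: translate one triangle into card commands."""


class CardA(MiniGL):
    def submit(self, tri: List[Vertex]) -> None:
        # This imaginary card takes a whole triangle in one command.
        self.log.append(f"A_DRAW_TRIANGLE {tri}")


class CardB(MiniGL):
    def submit(self, tri: List[Vertex]) -> None:
        # This imaginary card wants one command per vertex, then a "go".
        self.log.extend(f"B_LOAD_VERTEX {v}" for v in tri)
        self.log.append("B_RASTERIZE")


def draw_scene(gl: MiniGL) -> None:
    """The 'game': written once, runs on any card that has a translator."""
    gl.begin_triangle()
    gl.vertex(0.0, 0.0, 1.0)
    gl.vertex(1.0, 0.0, 1.0)
    gl.vertex(0.0, 1.0, 1.0)
    gl.end()


for card in (CardA(), CardB()):
    draw_scene(card)
    print(type(card).__name__, card.log)
```

The payoff is that `draw_scene` never changes: supporting a new card means writing one new translator instead of patching the game.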

It’s a little hard to show you this today, but this is what GLQuake (and the Voodoo 2) did for Quake. First, a screenshot of Quake without hardware acceleration (taken on the Pentium III with a Voodoo 3 3000):

Pixels everywhere.

Now, with hardware accelerated GLQuake:

Suddenly the walls and floor look smooth and not blocky. Everything is much higher resolution. In motion everything is much more fluid. It may seem underwhelming now, but this was very hot stuff in 1997 and blew me away when I first saw it in 1999.
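Part of that smoothness comes from texture filtering. Here’s a minimal sketch of the difference, assuming grayscale textures and coordinates measured in texels: point sampling, which is essentially what the software renderer does, grabs the single nearest texel, so a wall viewed up close turns into big blocks. Bilinear filtering, which 3D cards could do cheaply for every pixel, blends the four surrounding texels by distance. The function names are mine, not GLQuake’s or any driver’s.

```python
import math
from typing import List

Texture = List[List[float]]  # grayscale brightness values


def nearest(tex: Texture, x: float, y: float) -> float:
    """Point sampling: take the single closest texel (blocky up close).

    Assumes (x, y) are already inside the texture, in texel units.
    """
    return tex[math.floor(y + 0.5)][math.floor(x + 0.5)]


def bilinear(tex: Texture, x: float, y: float) -> float:
    """Blend the four surrounding texels by distance (smooth up close).

    x and y are in texel units; coordinates are clamped to the texture.
    """
    h, w = len(tex), len(tex[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


# A 2x2 texture: dark on the left, bright on the right.
tex: Texture = [[0.0, 100.0],
                [0.0, 100.0]]

# Walk across the texture as if a wall were magnified on screen.
for step in range(5):
    x = step / 4
    print(f"x={x:.2f} nearest={nearest(tex, x, 0.0):5.1f} bilinear={bilinear(tex, x, 0.0):5.1f}")
```

Point sampling jumps straight from 0 to 100 halfway across, while bilinear filtering ramps through 25, 50, and 75, which is the difference between a blocky wall and a smooth one.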

What we didn’t realize at the time was that it was pretty much all downhill for 3DFX after the Voodoo 2. After the Voodoo 2, 3DFX decided to stop selling chips to 3rd party OEMs like Metabyte and Guillemot and produce their own cards. That’s why my boxed board is just branded 3DFX. This turned out to be disastrous because suddenly they were competing with the very companies that had sold their very successful products in the 1996-1998 period. They also released the Voodoo 3, which combined 2D graphics hardware with 3D graphics hardware on a single chip (that was hidden under a heatsink).

The Voodoo 3 was an excellent card and I loved the Voodoo 3 3000 that was in the Dell Pentium III my parents bought to succeed the Presario. However, 3DFX was having to make excuses for features that the Voodoo 3 didn’t have and their competitors did have (namely 32-bit color). Nvidia’s TNT2 Ultra suddenly looked like a better card than the Voodoo 3.
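Why did 32-bit color matter? Voodoo cards rendered in 16-bit color, and a common 16-bit layout (assumed here for illustration) is RGB565: 5 bits of red, 6 of green, and 5 of blue. This Python sketch packs colors into that format and shows how nearby shades in a smooth gradient collapse into the same value, which is what shows up on screen as banding in skies and fog.

```python
from typing import Tuple


def to_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit-per-channel color into 16 bits: 5 red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def from_rgb565(p: int) -> Tuple[int, int, int]:
    """Unpack 16-bit color back to 8 bits per channel (low bits are lost)."""
    r = (p >> 11) & 0x1F
    g = (p >> 5) & 0x3F
    b = p & 0x1F
    # Copy the high bits into the empty low bits so full white stays 255.
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))


# Eight slightly different sky blues: distinct in 24-bit color, but they
# all land in the same 5-bit blue step once packed into 16 bits.
shades = [(100, 150, 200 + i) for i in range(8)]
packed = [to_rgb565(*c) for c in shades]
print(len(set(shades)), "24-bit shades ->", len(set(packed)), "16-bit shade(s)")
```

With 32-bit color each channel keeps all 8 bits, so those eight blues stay eight different blues.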

3DFX was having trouble producing their successor to the Voodoo line and instead was having to adapt the old Voodoo technology to keep up. The Voodoo 4 and 5, which consisted of several updated Voodoo 3 chips working together on a single board, ended up getting plastered by Nvidia’s GeForce 2 and finally GeForce 3 chips, which accelerated even more parts of the graphics rendering process than 3DFX did. 3DFX ceased to exist by the end of 2000. Supposedly prototypes of “Rampage”, the successor to Voodoo, were sitting on a workbench being debugged the day the company folded.

Back in the late-1990s 3D acceleration was a luxury meant for playing games. That’s no longer true: 3D graphics hardware is an integral part of almost every computer. Today every PC, every tablet, and every smartphone sold has some sort of 3D acceleration. 3DFX showed us the way.

VideoLabs ScholasticCam

This is my VideoLabs ScholasticCam desktop video camera from 1999, a sort of TV camera on a goose-neck mount that, based on its name, seems to have been intended for classroom use.

Oddball stuff like this is why I love the Village Thrift on State Road in Cuyahoga Falls. At other thrift stores you’re lucky to get a smattering of mundane electronics like old TVs and VCRs. At Village, they do have an electronics section but they also have another section that’s just a long set of shelves full of everything imaginable: housewares, videogames, cookware, audiobooks, sporting goods, board games, medical supplies, etc. On a typical trip to Village Thrift my Dad and I will scrutinize these shelves several times because lost in the piles can be real gems.

The ScholasticCam is one of those things that you see out of the corner of your eye and think “What is that?”

When I spotted this thing I was hoping it was a web cam that magnified so that you could capture close up images of small objects on a PC. It turns out that it’s a bit too early for that. It’s actually a tiny TV camera with S-Video and composite outputs.

The box implies that you can use it as a web cam but when you read the fine print it says in order to use it like that you need a video capture device on your computer, which they do not supply.

On the other side of the box it looks like VideoLabs sold a line of similar cameras for other purposes. I would love to have the model that attaches to a microscope.

At first it looks like the ScholasticCam does not have any obvious controls. The base has nothing whatsoever other than a connector for the power/video-out dongle and the VideoLabs logo.

There’s a power button and indicator light on the camera.

What’s not immediately obvious is how to focus the camera. See that cone-shaped thing at the bottom of the camera? That twists for focus. It feels a little too free and loose when it turns. I would prefer something a bit smoother and firmer.

One problem I noticed is that focusing the camera inevitably bumps it slightly out of position. You end up having to very delicately twist the focus while watching your video source to see how you’re doing. It seems like twisting in one direction and looking in another leads to more camera bumping.

To its credit, the ScholasticCam is built like a tank. The base is well weighted so that you can bend the neck very far without it having balance issues.

I was very curious to see what this camera’s pictures looked like on a computer. I don’t have any modern video capture equipment but I do have this Dazzle Digital Video Creator USB (circa 2000) that I found at the Midway Plaza Goodwill in Akron (it’s a pretty safe bet it will show up in a future blog entry).

The Dazzle, like many PC peripherals of its day, does not have drivers for modern versions of Windows (Vista, 7 and 8). I had to pull out the old Gateway Pentium 4 I used in college to fire up Windows XP and install the Dazzle’s drivers. I quickly discovered that with the Dazzle the image doesn’t update on screen at full speed. You can output to a TV and see a 30-fps view of what you are capturing, but I was too lazy to hook that up. So, making fine adjustments to the ScholasticCam while watching at 5 fps on the computer screen was a bit of a pain.

The Dazzle is sitting on top of the Gateway mini-tower and the ScholasticCam is sitting on the table with some objects I want to look at.

The first time I tried hooking up the ScholasticCam and the Dazzle to the Gateway it was at night and I quickly found that the lamp I had on in this room was not providing enough illumination for the ScholasticCam. So I improvised with a little LED light with legs.

The next day I moved into the living room so that I could try some images under daylight.

This 2012 Hawaii Volcanoes America the Beautiful Quarter presents a difficult target to image. Keep in mind that this is connected via S-Video (which is alright, but not great) through a low-grade video capture device and JPEG compressed. This is under the artificial lighting at night. As I said before, focusing was difficult because every time you focus you bump the camera.

There are a lot of strange color aberrations and much of the detail on the eruption has been lost.

This image taken with daylight is a little better. At least you can see the eruption.

One of the problems is that the large focus cone on the ScholasticCam does not do you any favors for lighting. It would be better if it had a built-in lamp on the camera.

Here’s another coin, a 1964 Kennedy Half Dollar. First, under artificial lighting.

We see more of those color aberrations but the details are good. And now, under daylight.

The colors are a bit better under daylight. But the color fidelity on this camera, and the resolution it outputs at, make everything look like it’s being taken underwater by a submarine.

Something else I noticed is geometric distortion from the lens. This TexelFX² chip is clearly supposed to be square.

This Intel 486 chip is also supposed to be square.
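Squares that come out bulged like this are usually described with a simple radial distortion model: each point in the image is pushed toward or away from the center of the lens by an amount that grows with its distance from the center. Here’s a minimal one-term version in Python. The coefficient is an illustrative value, not something measured from the ScholasticCam.

```python
from typing import Tuple


def radial_distort(x: float, y: float, k: float) -> Tuple[float, float]:
    """One-term radial lens distortion model.

    (x, y) are normalized image coordinates with the lens center at (0, 0).
    Each point is scaled by (1 + k * r^2), where r is its distance from the
    center: k < 0 gives barrel distortion, k > 0 gives pincushion.
    """
    r2 = x * x + y * y
    scale = 1 + k * r2
    return (x * scale, y * scale)


# A square centered in the frame: compare a corner with an edge midpoint.
k = -0.1  # illustrative value, not measured from the ScholasticCam
corner = radial_distort(0.5, 0.5, k)
edge_mid = radial_distort(0.5, 0.0, k)
print("corner x:", round(corner[0], 4), " edge midpoint x:", round(edge_mid[0], 4))
```

Because the corner sits farther from the center, it gets pulled in more than the middle of the edge, so the sides of the square bow outward and it no longer looks square.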

I spent some time trying to focus this image of the serial number on the bottom of this Apple Desktop Bus Mouse, and I think I got a good example of what a properly focused image looks like.

Honestly, it seems like my iPad gets better images than this.

Now, it could be that without having the manual to this thing, I’m not giving the ScholasticCam a fair shake. It also could be that this camera is not intended for taking such close up images. But it seems like this well-built and obviously highly engineered camera from the late-1990s has been thoroughly outclassed since then. It also reminds me that phone camera tech was producing images of similar quality to this just a few years ago. It makes you appreciate how far image sensor technology has come.

Odds and Ends #2

I mentioned last week how much I loved going to the library as a child. These days rather than going to the library I tend to buy used books from thrift stores and used book stores.

I used to look at thrift store book sections with disdain because they were mostly filled with romance novels, out-of-date political books, self-help guides from the 70s, and other forms of useless drivel.

But, what I came to realize is that there’s always a diamond in the rough and considering how much rough thrift stores tend to have, the rate of finding diamonds is pretty high. The beauty of it is that because these books tend to be so cheap you can really indulge your curiosity without feeling like you’re throwing away money.

Sometimes I’ll buy a book because I know nothing about the subject matter.

Ekiben: The Art of the Japanese Box Lunch

I was at the Goodwill on State Road in Cuyahoga Falls recently when I found this 1989 coffee table book about Ekiben, the Japanese tradition of creating special Bento box lunches for sale at train stations so that people can eat them on the trains.

I can’t imagine a similar book about American airline food, can you?

Other times I will buy a book because I am very familiar with the subject matter or I’m collecting books on a specific subject. Ever since my parents bought me the Encyclopedia of Soviet Spacecraft as a child I’ve been interested in collecting books about spaceflight, including books by or about astronauts.

We Have Capture: Tom Stafford and the Space Race

I think I found this copy of We Have Capture, the autobiography of astronaut Tom Stafford (co-written with space writer Michael Cassutt) at the Waterloo Road Goodwill in Akron.

Among the Apollo astronauts, Tom Stafford is somewhat forgotten because he didn’t walk on the Moon, and until I read We Have Capture I didn’t realize how much of an impact Stafford had made. After flying on Gemini 6 and Gemini 9, Stafford commanded the Apollo 10 mission, which was a dress rehearsal for Apollo 11. He and Gene Cernan descended in the Lunar Module to about 47,000 feet above the Moon’s surface before testing the Lunar Module’s ability to abort during landing.

However, the most interesting part of Stafford’s career came after the Moon landings. In 1971 he was sent as a US representative to the funeral for the cosmonauts who died on the Soyuz 11 flight. Later he would command the Apollo-Soyuz Test Project (ASTP), the flight that is depicted in the jacket image. ASTP is somewhat forgotten today, but in a historic moment of the Cold War in 1975 the final US Apollo flight docked with a Soviet Soyuz spacecraft in order to demonstrate international cooperation. What’s fascinating is that in the 25 years after ASTP Stafford continued to act as an adviser for NASA and helped to shepherd the Shuttle-Mir flights and the transformation of the failed Space Station Freedom project into the joint US-Russian-European-Japanese International Space Station project. In many ways the most interesting parts of the book have to do with Stafford’s techno-bureaucrat functions on the ground more than what he did in space.

Incidentally, I hope someday a space writer like Michael Cassutt, Andrew Chaikin or Dwayne Day writes a book-length history of the origins of the International Space Station (ISS). From what I understand there were some unique political, diplomatic, and engineering challenges that were overcome to create the ISS.

The best writer to tell that story may be William Burrows, author of books including Deep Black and Exploring Space.

Exploring Space: Voyages in the Solar System and Beyond

I found this copy of Exploring Space at the Waterloo Road Goodwill in Akron. This is a funny book because, judging by the cover, it looks like your standard “spaceflight is so great” kind of hagiography that’s common among books about spaceflight. In Exploring Space, from 1990, Burrows actually takes a more critical approach.

I don’t think Burrows dislikes us spending money on exploring space. Rather, he’s unhappy, perhaps even disgusted, with the way we’ve gone about doing it. The history of spaceflight is rife with good ideas that were poorly executed repeatedly before the engineers got them right (JPL’s early flights in the Pioneer, Mariner, Ranger, and Surveyor series), good ideas that we spent way too much money on before they were finally executed right (Viking and Voyager), and questionable ideas that were forced to be realized because of political pressure (like the Space Shuttle). The bizarre way that we fund spaceflight through political kabuki lends itself to these kinds of costly messes. I suspect that if Burrows were writing Exploring Space today he would be more sympathetic to NASA’s cost controlled Discovery program, very unhappy with the James Webb Space Telescope, and seething with rage about the forthcoming SLS launch vehicle.

An interesting example of when spaceflight vision and reality collide is well illustrated by…

Challenge of the Stars: A Forecast of the Future Exploration of the Universe

This thin, coffee-table-sized volume is another book I found at the Waterloo Road Goodwill. I remember that I spotted it right after one of the book’s authors, the English astronomer and television presenter Patrick Moore, had died late last year.

Much like The Compact Disc Book, which I mentioned last week, the fun of Challenge of the Stars is seeing which of its predictions came true and which didn’t. One thing they got right was the “Grand Tour” of the solar system that became the Voyager 1 and 2 probes.

This is a stunning illustration of a proposed docking between a Soviet Soyuz and the US’s Skylab space station (note the Apollo CSM waiting in the distance). This idea was turned down in favor of the Apollo-Soyuz Test Project flight that Tom Stafford flew.

What really caught my eye though, was the section on space stations and a manned Mars landing.

On the bottom left is one of the earlier proposals for the Space Shuttle. Instead of the External Tank and Solid Rocket Boosters we became so familiar with, this earlier proposal used a liquid-fueled booster that would fly back to the launch site and land rather than being discarded like the External Tank.

The real prize though, is the photo on the opposite page. Here’s a closer view.

Other than the fact that this is a beautiful piece of art, there’s quite a bit of political history attached to this image. It was produced for a study that Von Braun’s group at Marshall Space Flight Center conducted in 1969 about what to do after Apollo.

That blunt-nosed craft in the middle of the image, the one with three cylinders bearing the USA insignia, is Von Braun’s idea for a manned Mars exploration ship. The three USA-labeled cylinders are actually nuclear-powered rockets. Here a space shuttle is delivering a fuel shipment to the craft while it’s being assembled in orbit near a space station. What you’re seeing envisioned here would have taken dozens of Saturn V launches to get into orbit.

On a later page is an illustration of what the Mars Excursion Module, Von Braun’s Mars lander, would have looked like sitting on Mars. Note that it’s basically a giant-sized Apollo command module.

The excellent False Steps blog goes into more detail, but essentially this outrageously expensive proposal was laughed out of the room in Washington. One of the reasons we got the Space Shuttle after Apollo was that the Shuttle was seen as more cost-effective than Apollo, and in this atmosphere NASA’s spacecraft designers at Marshall were tilting at windmills rather than proposing a more cost-effective alternative to the Shuttle.

It’s fascinating to imagine what might have been though, had Von Braun’s Mars mission proposal been accepted by Nixon. In fact…

Voyage

Voyage, by Stephen Baxter is a science fiction novel that explores an alternate history where a version of Von Braun’s proposal was actually carried out and the United States landed on Mars in 1986.

I believe I found this paperback at Last Exit Books in Kent.

Voyage is a real treat for spaceflight fans because it goes into immense detail about the political squabbling, engineering feats, test flight mishaps, and other nerd candy that led up to the Mars landing. Clearly Baxter studied the various Mars mission proposals from the late 1960s and early 1970s carefully, because many of the details from Von Braun’s plan, like upgraded versions of the Saturn V and the NERVA nuclear rocket project, make their way into Voyage. He also takes cues from real life. For example, instead of the Challenger disaster, a gruesome mishap occurs on a NERVA rocket test flight. Instead of the ASTP mission, the Soviets are invited to a US Skylab-style station orbiting the Moon. If you’re a space nerd at all, Voyage is going to be right up your alley.

Sometimes I stumble onto neat space memorabilia in unexpected places.

Atlas V AV-003 Interactive DVD

I was at the Kent/Ravenna Goodwill a few weeks ago browsing the DVDs and suddenly I see a DVD that says Atlas V AV-003 on the side.

I expect to see Atlas V rocket serial numbers on the NASASpaceflight.com forums, not on something at Goodwill.

The Atlas V is a launch vehicle originally developed by Lockheed Martin and currently built and operated by the United Launch Alliance. You might remember the original Atlas rocket that began as an ICBM in the 1950s, flew astronauts during the Mercury program in the early 1960s, and became a workhorse for launching satellites and space probes well into the 1990s. Since then, the Atlas name has become a sort of brand name for the Atlas rocket family. The current Atlas V has design heritage that goes back to the Titan and Atlas-Centaur rockets and uses a first stage booster engine built by the Russians.

This is the Atlas V AV-003 Interactive DVD. AV-003 refers to the serial number of the rocket, so this DVD documents the launch of the third Atlas V in 2003.

At first I was a bit disappointed in this DVD because it seemed to be full of standard marketing video drivel and over-produced launch video crud. That is, until I found the menu that lets you watch every camera that was covering the launch. There are the cameras you’d expect: cameras on the pad and tracking cameras that follow the rocket from afar.

But then there are cameras mounted on the first and second stages. I’ve seen these used on launch videos before, but I had never had the chance to just watch the raw footage with no commentary or editing.

Here is a view from a camera on the first stage looking downward as one of the solid rocket boosters separates.

And there it goes tumbling away.

This camera is looking upward as the payload fairing (aka the nose cone) separates after the rocket has gotten far enough out of the atmosphere that it can shed the weight of the fairing.

This is from the same camera looking upwards after the first stage has shut down and the second stage, a Centaur upper-stage, has started and speeds away from the dead booster.

I have no idea how a DVD like this made its way to the Kent Goodwill, but it made my day when I found it.

SNK NeoGeo Pocket Color

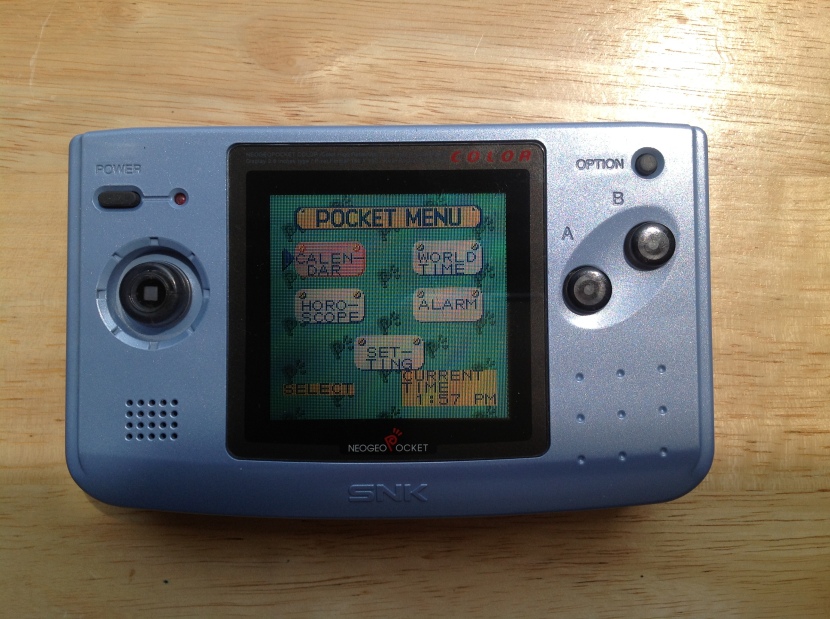

This is my NeoGeo Pocket Color (NGPC), a short-lived handheld videogame system that the somewhat esoteric Japanese company SNK sold from 1999 to 2001.

I believe I found it at Village Thrift sometime well after the system was discontinued in the United States in 2000.

Village Thrift has a good habit of taking a lot of items that go together, like an NGPC and several games, and putting them together in a clear plastic bag. I remember finding it in their showcase rather than in the electronics section.

I’m also not sure if the scratch on the screen was there when I bought it or if that happened later.

The games that were in that plastic bag along with the NGPC were:

Sonic The Hedgehog Pocket Adventure, a Sonic the Hedgehog platformer:

Bust-A-Move Pocket, an entry in the well-known puzzle game series that resembles Bejeweled:

Baseball Stars Color, a fairly straightforward baseball game:

Neo Dragon’s Wild, a collection of “casino” games:

Metal Slug 1st Mission, a portable version of SNK’s Rambo-like side-scrolling platform shooter:

And finally Metal Slug 2nd Mission, the sequel to Metal Slug 1st Mission:

Oddly enough, there were no fighting games in that lot, even though fighting games were what SNK was known for and what enthusiasts wanted the NGPC for.

There are several neat and interesting things about the NeoGeo Pocket Color hardware. The first is that it has this tiny spec sheet for the display written above the screen.

You know, in case you ever need to look up its pixel pitch.

The screen on the NGPC can be difficult to see in all but direct sunlight and it can also be a pain to photograph. So, if my screenshots look odd, that’s why.

The need for very bright light in order to see the colors well was a problem with a lot of the color portable systems of this time period. I fondly remember the uncomfortable position I had to sit in to play Tetris DX on my purple Game Boy Color while hunched below a reading light that was attached to my bed. Penny Arcade memorably poked fun at the hard-to-see screen on the Game Boy Advance in 2001. The rise of back-lit screens like the one on my Game Boy Micro finally alleviated this problem.

The unfortunate thing is that when you see screenshots of games from the NGPC and Game Boy Color from emulators you realize what beautiful colors those games were putting out and how the hardware made them almost impossible to see.

The NGPC has a unique 8-way joypad located on the left side of the console. This was the system’s most memorable feature and one that people would be wishing for on other portable game systems for years afterward.

Unlike the control stick you might see on an Xbox controller, this is a digital control pad rather than an analog one. However, because it can move in eight directions, it’s easier to point in a diagonal direction than with a conventional cross-shaped D-pad like the ones on Nintendo systems. SNK specialized in 2D arcade games that had sophisticated joysticks, so it’s no surprise to find something like this on their portable system. The joypad has a really solid feel and makes a lovely clicking sound as you move it around.

On the back the NGPC curiously has two battery covers. The system runs on two AA batteries and uses one CR2032 button battery to back up the memory that stores saved games and settings.

If the CR2032 dies you get this lovely warning message when you turn off the console.

When you turn on the NGPC the boot screen has an attractive little animation accompanied by a cute little tune.

If you turn on the NGPC without a cartridge you get this menu screen that includes a calendar and (in silly Japanese fashion) a horoscope.

I can’t think of a videogame feature more utterly useless than a horoscope.

In order to explain the NeoGeo Pocket Color I have to tie together a few threads.

SNK is not what you would call a household name in the US, but it was one of the titans of the Japanese arcade in the 1980s and 1990s. Their specialty was (and still is) 2D fighting games: games whose graphics are hand-drawn sprites that view the action from the side.

In 1990 SNK released the NeoGeo home console which for all intents and purposes was one of their arcade machines repackaged for home use. As you would expect it was outrageously expensive. The console itself (including a pack-in game) was $650 and games cost $200.

If you owned a Sega Genesis or a Nintendo SNES the NeoGeo must have seemed like some sort of mystical Shangri-La.

If you purchased a NeoGeo, what you got for your obscene amount of money was perfect arcade games in the home. From the beginning of the home console market, the fidelity of home console conversions of arcade games had been a constant problem. There were always compromises in animation, music, fluidity, and control sensitivity. Not so if you were a NeoGeo owner. What you got was perfection.

But in the mid-1990s the market moved on to consoles such as the PlayStation and N64 that were built around 3D graphics. The arcades moved on too, and fighting games like the Tekken and Virtua Fighter series that were built on 3D graphics excited the public’s imagination.

SNK’s contemporaries like Capcom (whose Street Fighter series is one of the pillars of the 2D fighting genre) found ways to stay relevant by developing new series like Resident Evil while continuing their line of 2D fighting games. SNK didn’t and the NeoGeo became an expensive collector’s item.

Then, something possessed SNK to release a monochrome portable system called the NeoGeo Pocket in 1998 followed by the NeoGeo Pocket Color in 1999.

1999, when the NGPC came out, was a very strange time for portable videogames because it’s when the technological gap between home consoles and portable consoles was at its peak. It wasn’t so much a gap as it was a chasm.

It’s funny how technology trends wax and wane over decades. Today, vast sums of R&D dollars are being spent on making components (especially CPUs and GPUs) for portable electronics faster and more power efficient. Intel’s new (June 2013) Haswell processors offer marginal speed gains for desktop users but better battery life and less heat for mobile users. Across the industry, from Apple to Samsung, the effort is going into making better mobile devices.

Back in 1999, everything was different. Back then the money was being put into chips for devices like PCs and home game consoles that were plugged into the wall. We wanted faster devices and didn’t much care how much power they used or how much heat they put out.

In the home console market, the Dreamcast had just debuted, ushering in an era of more refined 3D graphics that would lead to the PS2, Xbox, and GameCube.

The Dreamcast was powered by a 32-bit Hitachi SH-4 RISC CPU and a PowerVR GPU that’s the direct ancestor of the GPU in today’s iPad and PS Vita.

Meanwhile in the portable realm the popular Game Boy Color was still based on an 8-bit CPU, technology that was solidly rooted in the game consoles of the 1980s. Very roughly this meant that the state-of-the-art home console was at least several hundred times more powerful than the leading portable console.

Battery life was the root cause of this gap. You could build a much more powerful portable system with a much more powerful CPU, much more RAM, and a higher resolution back-lit screen. But, it would have been heavy and had horrendous battery life. The marketplace thrashings that the Atari Lynx, the NEC TurboExpress, the Sega Game Gear, and the Sega Genesis Nomad received at the hands of the Game Boy throughout the 1990s were all clear proof of this reality.

Essentially those systems had tried to be to the Game Boy what the NeoGeo had been to the SNES and the Genesis, a far more powerful, far more expensive competitor. But, there was no place for that in the portable market.

The breakthroughs that would happen in the mid-2000s that allowed the revolution in portable computing devices simply had not occurred yet.